
23 May, 2024

Fred Lichtenstein and Shaunagh Downing

From the photorealism achieved by OpenAI’s generative video engine Sora to the continued popularity of image generators like Stable Diffusion and DALL-E, AI has inexorably become more powerful since our last quarterly AI review.

As a result, one recent initiative, written by Thorn, All Tech Is Human, and others, aims to make generative AI safer for all.

The ‘Safety by Design’ pledge bakes safeguarding principles into the development, deployment, and maintenance of new AI tools. For example, ensuring that AI training data is free from illicit imagery is just one of the recommended principles defined in the full document.

It’s a pledge that has already been signed by some of the world’s foremost tech giants, signalling a global commitment to safer AI development and usage. As Thorn writes:

“Amazon, Anthropic, Civitai, Google, Meta, Metaphysic, Microsoft, Mistral AI, OpenAI, and Stability AI publicly committed to Safety by Design principles. These principles guard against the creation and spread of AI-generated child sexual abuse material (AIG-CSAM) and other sexual harms against children.”

We sat down with Data Scientist Fred Lichtenstein and Research and Development Engineer Shaunagh Downing to discuss the principles in more detail, exploring what they mean for the AI landscape, the other challenges that need to be addressed to ensure an effective rollout, and what the future of safe AI content creation holds.

Shaunagh: First, I have to say how impressed I am with these principles. I was lucky enough to help review them and provide feedback late last year, and it’s clear that a lot of effort went into them. The principles are well considered, promoting safer practices and offering valuable guidance for responsible AI development.

I believe that adhering strictly to everything outlined in the document could practically eliminate the possibility of creating AIG-CSAM (AI-generated child sexual abuse material).

Unfortunately, a multitude of AI tools that weren’t developed with these principles in mind are already out there. If they had been, maybe we wouldn’t have encountered the issues we discussed during our last quarterly AI review.

That said, it’s encouraging to see so many tech giants committing to this pledge. It’s a promising step forward for responsible AI practices.

Fred: It’s impressive how Thorn has brought these huge companies together to sign such a comprehensive document. I admire how focused it is, specifically on combating CSAM. It’s not just about datasets; it really homes in on the key principles of developing these tools.

What I find particularly intriguing is the reporting aspect. The principles include a requirement to report any CSAM that is found. What this will look like isn’t yet clear, but reporting is imperative to effect change. In my opinion, reporting will determine the success or failure of this initiative. Either way, it’s a clear step in the right direction.

Fred: Video content is making its mark. New tools mean there’s even more accessible and realistic content that could be more enticing to a wider audience. Of course, the big one to mention here is Sora. It’s an incredibly sophisticated video creation tool, and the potential risks aren’t hard to imagine. That’s why OpenAI is stress-testing it as much as possible before public release.

We’ve also seen casual AI manipulation grow in popularity, with more and more toolsets for creating images of specific individuals from fewer and fewer seed images. For me, that only shows how accessible the process of exploitation is becoming.

Shaunagh: Sora is an impressive tool, and it’s great to see a heavy focus on stress testing and safety before it is fully released.

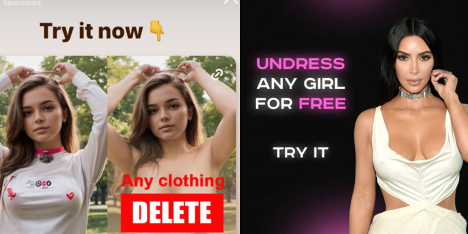

Since our last review, the ‘Nudifying’ apps that we talked about in our last blog have continued to be popular. It’s alarming to see the ease with which they’re being advertised on platforms like Instagram without much regulation. It’s a stark reminder that while technology evolves, so do the associated harms, which disproportionately affect girls and women.

Two Instagram advertisements promoting AI ’Nudifying’ capabilities on Instagram.

However, some positive steps are being taken. Google has banned advertisers from promoting deepfake porn services, and Stability AI have committed to taking steps to prevent the misuse of Stable Diffusion 3 before its public release. Hopefully, this is a sign that some tech companies are beginning to take the Safety by Design principles seriously.

Shaunagh: It’s difficult now, as we are stuck addressing the harms caused by previous open-source generative AI development that never went through the thorough safety assessments recommended in the Safety by Design paper. For me, one thing that could make a big difference very quickly is making AI tools and tutorials that can be used to create illicit content less accessible.

It would be great to see search engines delisting links to the types of services and tutorials that create “nudified” and sexualised images of children. Introducing friction is one way to deter as many people as possible from making this type of content. This is one of the principles outlined in the Safety by Design document, but we are still waiting to see any significant action from major search engines on this.

Fred: Absolutely. The tools are already out there, so the focus now is on minimising exposure, access, and future development.

It’s great to see so many companies now embedding these principles into their frameworks, but we also need to manage client-side access to other tools that don’t have these principles behind them.

Instagram has already taken steps to label its own AI imagery, with Meta’s Nick Clegg saying this will be expanded to all AI imagery on its platforms ‘in the coming months’.

Shaunagh: The process of responsibly sourcing your training datasets and filtering them for harmful content is incredibly important. As discussed in our previous blog, a popular AI image training dataset was found to have thousands of instances of CSAM. This could have been avoided by following Safety by Design principles and taking steps to detect and remove this type of content.

Unfortunately, even if there are no instances of CSAM in the training data, models can still combine separate concepts they have learned during training, e.g. benign images of children and adult sexual content, to create AIG-CSAM. This concern is also addressed in the Safety by Design paper, with one suggested mitigation being never to include both depictions of children and adult sexual content in training data.

Fred: It’s important to realise how huge these datasets are. When you’re dealing with 5 billion images, it’s simply impossible to check each one by eye.

Shaunagh makes a great point. AI can generate harmful content regardless of what is in its training data. But the real concern isn’t so much its capability to create; it’s how we ensure, knowing this potential, that it isn’t used to cause harm. That’s why we believe the solution lies in introducing more barriers to prevent misuse.

Fred: It’s reassuring to see advances in counter-technologies aimed at identifying risky behaviour. That’s where I find a ray of hope: knowing that dedicated teams are tackling these challenges head-on and integrating these principles right from the start of development. One issue with the tech giants is the link between their policy teams and implementation. We’re seeing a clear gap between what is promised and what appears on their platforms.

I think companies that prioritise safety, such as Anthropic, add pressure to the existing tech giants and set a precedent for what should be done.

Looking ahead to our next review, I’m eager to see how these principles translate into action.

Shaunagh: Absolutely. It’s easy to feel overwhelmed considering the sheer scale of the issue, but I try to focus on the positive strides we’re witnessing.

The fact that these companies are engaging with Thorn and All Tech Is Human speaks volumes: it shows a willingness to address the problem, and we’re seeing some encouraging steps forward. I’m looking forward to seeing more concrete action from Big Tech to adopt the Safety by Design principles.

We believe in safe, responsible AI practices. Safety starts with training models with non-harmful imagery and continues throughout the testing, evaluation, and deployment phases.

As the AI landscape continues to evolve, we’re dedicated to collaborating with law enforcement, other developers, and Big Tech companies wherever possible to provide the tools and insights needed to drive positive change.

Every quarter, Fred and Shaunagh explore the AI landscape in detail, providing commentary on the latest challenges and trends. Read their other entries on our blog today.